Diffusion Models and Beyond: Exploring the Intersection of Physics and Machine Learning

Generative Models: The Art of Data Mimicry

Picture a talented artist, able to skillfully imitate the style of famous works of art. This artist can study the works of Van Gogh or Monet and produce never-before-seen paintings that have the same look and feel. Maybe you’ve heard of Midjourney, DALL-E or Stable Diffusion? They do something similar, turning a prompt of simple text into richly detailed images, spanning widely across the range of human creativity. The technology underlying these incredible tools, the skilled artists of the machine learning world, is known as generative modeling.

Generative models are a special class of machine learning algorithms that strive to learn the underlying structure and patterns within a dataset. By modeling these relationships, they can generate new samples that closely resemble the original data. Think of these models as creative apprentices, absorbing the essence of their training data and producing original works that echo the characteristics of everything they’ve studied.

There are several types of generative models, each with its own approach to learning and generating data, such as variational autoencoders (VAEs), generative adversarial networks (GANs) and denoising diffusion models. The last of these, a relatively recent addition to the family of generative models, has been rapidly adopted due to its ability to generate highly realistic images. Let’s dive into what makes that possible.

Diffusion: A Dance of Particles

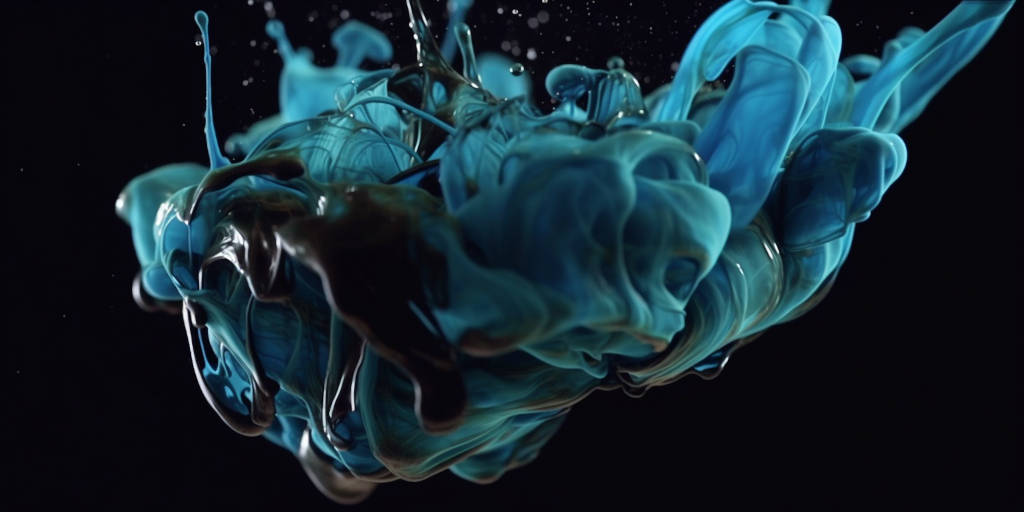

To understand denoising diffusion models, we need to touch on the concept of diffusion processes. Picture a drop of ink falling into a glass of water. At first, the ink forms a small, concentrated spot. But as time passes, the ink particles disperse throughout the water, spreading and swirling in a mesmerizing dance. This natural phenomenon, where particles move from areas of high concentration to low concentration, is known as diffusion.

One important property of diffusion processes is that, given enough time, the result looks the same no matter what the initial conditions were. This means that if we could somehow reverse the dynamics of diffusion, we could start from the predictable, smeared-out final state and run the process backwards in time to arrive at a plausible initial state. It’s like watching the ink dance in reverse: starting with a chaotic mixture of particles and gradually reassembling them into a coherent, recognizable pattern. This is exactly the process by which diffusion models generate data.
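The "forgetting" of initial conditions can be checked numerically. The sketch below is not a simulation of ink in water: it assumes the kind of noising step commonly used in diffusion models, where each step slightly shrinks a value and adds a little Gaussian noise. The function names, the step count and the noise level `beta` are illustrative choices, not taken from any particular model.

```python
import math
import random


def diffuse(samples, steps=200, beta=0.05, seed=0):
    """Apply `steps` rounds of Gaussian noising to every sample.

    Each round shrinks the value slightly and adds fresh noise, so the
    spread stays bounded (an assumed "variance-preserving" step).
    """
    rng = random.Random(seed)
    keep = math.sqrt(1.0 - beta)
    spread = math.sqrt(beta)
    out = list(samples)
    for _ in range(steps):
        out = [keep * x + spread * rng.gauss(0.0, 1.0) for x in out]
    return out


def mean_and_variance(values):
    m = sum(values) / len(values)
    v = sum((x - m) ** 2 for x in values) / len(values)
    return m, v


# Two very different "ink drops": one concentrated at -3, one at +5.
drop_a = diffuse([-3.0] * 2000, seed=1)
drop_b = diffuse([5.0] * 2000, seed=2)

stats_a = mean_and_variance(drop_a)
stats_b = mean_and_variance(drop_b)
print(stats_a, stats_b)  # both end up close to mean 0 and variance 1
```

Although the two drops start far apart, after enough steps both are statistically indistinguishable from the same bell curve, which is what makes the final state a convenient starting point for running the process in reverse.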

Reversing the Dance: Training Diffusion Models

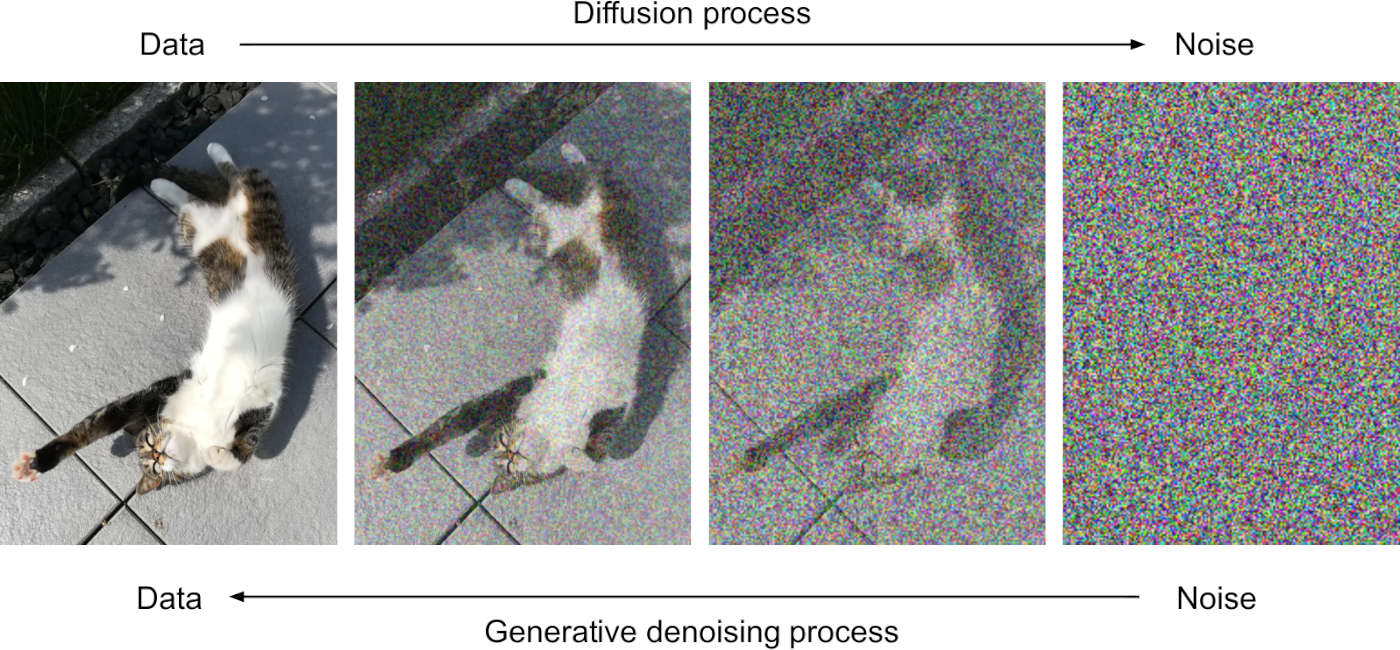

Training a diffusion model means teaching it to reverse the diffusion process by taking noisy data and reconstructing the original sample. To do this, the model needs to learn a series of denoising steps that will gradually reduce the noise and recover the training sample. This is achieved using neural networks with a carefully designed loss function. Let’s break down the steps involved in training a diffusion model.

- Creating a Diffusion Process: The first step is to simulate a diffusion process with the original dataset. This is done by adding noise to the data samples at each timestep. The noise gradually increases until the samples are completely unrecognizable. The model must then learn to denoise the samples at each timestep, transforming them back into their original, noise-free state.

- Denoising Neural Networks: To learn the denoising steps, a neural network is used. This neural network takes in the noisy samples from each timestep and tries to predict the noise-free data. In the case of image generation, for example, the neural network would take in a noisy image and output a cleaner version of the image, with slightly less noise.

- Loss Function: During training, the model needs to know how well it is denoising the data at each timestep. This is achieved using a loss function, which measures the difference between the model’s predictions and the true noise-free samples. By minimizing the loss, the model learns to generate cleaner, more accurate reconstructions.

- Training Iterations: The training process involves iterating through the dataset multiple times, presenting the model with various noisy versions of the samples. At each iteration, the model learns to predict the noise-free data more accurately, refining its denoising capabilities. The learning occurs by changing the neural network parameters in such a way that the loss function decreases (via gradient descent). This iterative process continues until the model converges, meaning its performance has reached a level at which it is no longer improving.

- Generation: Once the model is trained, it can be used to generate new, original samples that closely resemble the training data. This is achieved by running the diffusion process in reverse, starting from a sample of pure noise and gradually denoising it using the trained neural network.
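The five steps above can be sketched end to end on a toy problem. This is a minimal illustration under loud assumptions, not how production image models are built: the "dataset" is a list of single numbers rather than images, the "neural network" is replaced by one tiny linear model per timestep (`w[t] * x + b[t]`), the noise schedule `betas` is an arbitrary linear ramp, and the reverse step uses the standard Gaussian posterior formula with the model's guess plugged in for the clean sample. All names are hypothetical.

```python
import math
import random

rng = random.Random(0)

# Toy "dataset": numbers clustered around 1.0 (a stand-in for images).
data = [rng.gauss(1.0, 0.3) for _ in range(1000)]

# Step 1: diffusion process. betas[t] is the noise added at step t
# (assumed linear schedule); alpha_bar[t] is the signal left after t steps.
T = 40
betas = [0.01 + (0.25 - 0.01) * t / (T - 1) for t in range(T)]
alpha_bar = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bar.append(running)


def add_noise(x0, t):
    """Jump straight to step t of the forward diffusion process."""
    eps = rng.gauss(0.0, 1.0)
    return math.sqrt(alpha_bar[t]) * x0 + math.sqrt(1.0 - alpha_bar[t]) * eps


# Step 2: the denoiser. One linear model per timestep stands in for the
# neural network; it guesses the noise-free value from the noisy one.
w = [0.0] * T
b = [0.0] * T


def train(steps=200_000, lr=0.02):
    """Steps 3 and 4: squared-error loss, minimized by gradient descent."""
    for _ in range(steps):
        x0 = rng.choice(data)
        t = rng.randrange(T)
        xt = add_noise(x0, t)
        error = (w[t] * xt + b[t]) - x0      # loss = error ** 2
        w[t] -= lr * 2.0 * error * xt        # d(loss)/d(w[t])
        b[t] -= lr * 2.0 * error             # d(loss)/d(b[t])


def generate():
    """Step 5: start from pure noise and denoise one timestep at a time."""
    x = rng.gauss(0.0, 1.0)
    for t in reversed(range(T)):
        x0_hat = w[t] * x + b[t]             # model's guess of the clean value
        prev_bar = alpha_bar[t - 1] if t > 0 else 1.0
        # Gaussian posterior of the previous step given x and the guess x0_hat.
        coef_x0 = math.sqrt(prev_bar) * betas[t] / (1.0 - alpha_bar[t])
        coef_xt = math.sqrt(1.0 - betas[t]) * (1.0 - prev_bar) / (1.0 - alpha_bar[t])
        var = betas[t] * (1.0 - prev_bar) / (1.0 - alpha_bar[t])
        x = coef_x0 * x0_hat + coef_xt * x + math.sqrt(var) * rng.gauss(0.0, 1.0)
    return x


train()
samples = [generate() for _ in range(2000)]
gen_mean = sum(samples) / len(samples)
gen_std = math.sqrt(sum((s - gen_mean) ** 2 for s in samples) / len(samples))
print(gen_mean, gen_std)  # should land near the data's mean (1.0) and spread (0.3)
```

A linear denoiser is only adequate here because the toy data is a single bell curve. For images, the same loop is typically run with a deep neural network in place of `w` and `b`, and with a more carefully chosen noise schedule.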

Bridging Worlds: The Synergy of Physics and Machine Learning

Denoising diffusion models demonstrate the power of interdisciplinary collaboration, showing how concepts from physics can be beneficial in machine learning. By combining the principles of diffusion processes with neural networks, diffusion models have made it possible to generate highly realistic data samples, rapidly becoming the preferred method for image generation.

This fusion of physics and machine learning is not an isolated instance; there are many other examples of their fruitful interaction:

-

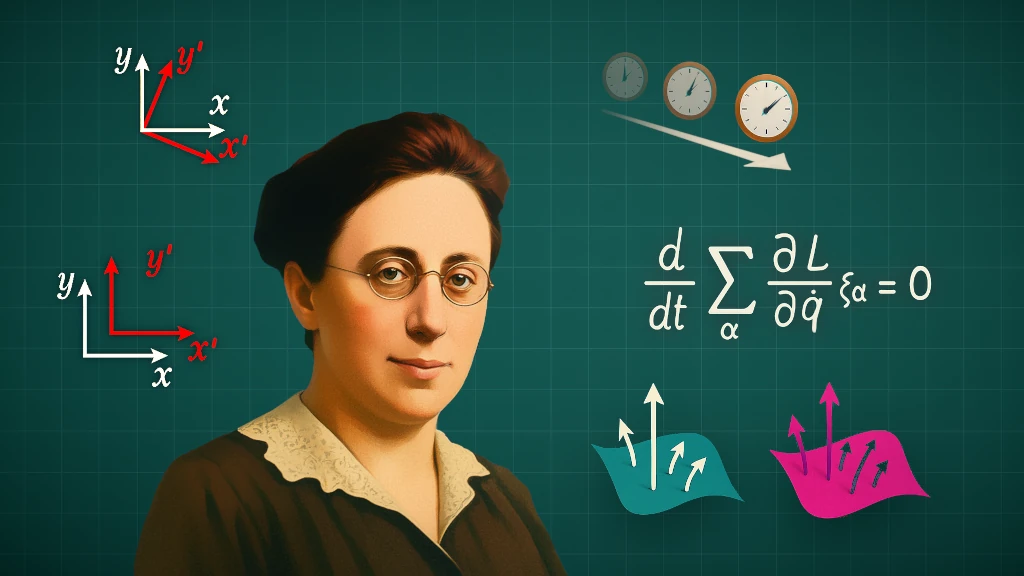

Ising Model and Boltzmann Machines: The Ising model is a statistical physics model originally created to explain the behaviour of ferromagnets. It inspired the development of Boltzmann machines, an early example of a generative model.

- Quantum Machine Learning: This field brings together quantum computing and machine learning to develop quantum algorithms that attempt to solve complex optimization problems more efficiently than classical algorithms. The field is still in its infancy and its practical advantages remain unclear, but if successful, quantum machine learning could speed up many standard machine learning tasks.

- WaveNet: A deep generative model that works directly on raw audio waveforms, predicting a sound wave one sample at a time. WaveNet excels in generating realistic audio signals, especially human speech.

- Neural Ordinary Differential Equations (Neural ODEs): These models incorporate ordinary differential equations (ODEs) – a fundamental concept in physics – into neural networks. Neural ODEs can be used for many standard machine learning tasks, such as classification and generation, as well as for learning dynamical equations from time-series data.

- Invariant and Equivariant Networks: In many physical systems, certain properties remain unchanged under specific transformations, such as rotations or translations. Neural networks that are designed to be invariant or equivariant to these transformations can learn more efficiently and generalize better. For instance, Convolutional Neural Networks (CNNs) exhibit translational equivariance, making them well-suited for image processing tasks. Recent advances in graph neural networks and geometric deep learning have led to the development of more sophisticated invariant and equivariant architectures, which are particularly useful in applications involving 3D structures, such as molecule or protein analysis.
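The translational equivariance of convolutions mentioned in the last example can be verified directly. The sketch below assumes a one-dimensional signal with wrap-around boundaries, which keeps the property exact. Real CNN layers work on 2D images and use padding, so the property holds only approximately near the edges. The helper names are made up for this illustration.

```python
def circular_conv(signal, kernel):
    """Slide `kernel` over `signal`, wrapping around at the boundaries."""
    n = len(signal)
    return [
        sum(kernel[k] * signal[(i + k) % n] for k in range(len(kernel)))
        for i in range(n)
    ]


def shift(signal, steps):
    """Translate a signal to the right by `steps` positions, wrapping around."""
    steps %= len(signal)
    return signal[-steps:] + signal[:-steps] if steps else list(signal)


signal = [0, 0, 1, 3, 1, 0, 0, 0]
kernel = [1, -2, 1]

# Equivariance: shifting the input and then convolving gives the same
# result as convolving first and then shifting the output.
shift_then_conv = circular_conv(shift(signal, 3), kernel)
conv_then_shift = shift(circular_conv(signal, kernel), 3)
print(shift_then_conv == conv_then_shift)  # prints True

# Invariance: a global summary such as the maximum ignores the shift entirely.
print(max(shift_then_conv) == max(circular_conv(signal, kernel)))  # prints True
```

Equivariance means the output moves along with the input, while invariance means the output does not change at all. A network that has these properties built in does not need to relearn the same pattern at every position.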

The interaction between physics and machine learning highlights the potential of the kind of interdisciplinary collaboration that occurs within STRUCTURES. By combining the knowledge and insights from multiple fields, researchers can develop more powerful models that push the boundaries of what machine learning can achieve. This synergy not only benefits machine learning practitioners but also opens up new avenues of exploration for physicists and other scientists, ultimately contributing to our understanding of the world and its underlying principles.

Note: Images without sources were generated by Midjourney v5, using the following prompts:

- A dynamic and engaging scene depicting a versatile artist standing proudly in front of an easel adorned with multiple canvases, each showcasing a unique painting meticulously crafted in the distinctive style of a renowned artist such as Van Gogh, Monet, Picasso, or Pollock. The composition highlights the artist’s exceptional talent, celebrating their ability to capture the essence of various art movements, techniques, and styles, while paying homage to the great masters in a visually captivating manner.

- A captivating close-up of a single drop of ink as it elegantly diffuses into a crystal-clear glass of water, forming an intricate and mesmerizing dance of swirling patterns, rich gradients, and ethereal beauty, slow-motion capture, 8k ultra HD, inspired by the fluid dynamics photography of Fabian Oefner.

- A thought-provoking and visually striking illustration representing the synergy of physics and machine learning, featuring interconnected networks of neurons and fundamental particles, seamlessly blending into each other, symbolizing the powerful collaboration between these two disciplines. The image uses bold colors and intricate patterns inspired by the works of M.C. Escher and Bridget Riley, encapsulating the infinite potential of combining scientific understanding with cutting-edge artificial intelligence in a captivating and innovative manner.

These prompts were in turn generated by ChatGPT after being shown examples of popular Midjourney prompts found at https://www.midjourney.com/showcase/top/.

Tags: Machine Learning, Generative Models, Neural Networks, Diffusion, Differential Equations, Physics, Computation